Difference between revisions of "OpenMP"

| (42 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

[[Category:Pragmatic Programming]] | [[Category:Pragmatic Programming]] | ||

| − | '''' | + | ''''OpenMP: Shared memory parallism'''' |

=Introduction= | =Introduction= | ||

| − | + | Computers. They're handy right? Email, buying stuff from Amazon.. You could even write a program. Create your own application. Indeed, for people of a certain mindset--people like you and me--we can do all sorts of interesting things like simulating the natural world, and in the process look at questions like, "will Greenland melt if we carry on polluting the atmosphere as we are?" and "what will be the consequences if it does melt?". | |

| − | Sometimes it's handy to have more than one computer. Let's say that we have a new whizz-bang weather model that takes 26 hours to work out what the weather will do tomorrow. " | + | Sometimes it's handy to have more than one computer. Let's say that we have a new whizz-bang weather model that takes 26 hours to work out what the weather will do tomorrow. "Very nice. Superb accuracy," you say, "but, alas, about as much use as a chocolate teapot." In order for the model to be useful in the real world, we need it to run faster. We need to divide up the work it does and run it over two, or more computers. We need to enter the world of parallel programming. |

| − | "Hippee!" we cry, but a word of caution. Getting models to work in parallel is a lot | + | "Hippee!" we cry, but a word of caution. Getting models to work reliably in parallel is a lot--I'll say it again--''a lot'' harder than getting them to work on a single processor. Before setting out down the road, it is well worth checking that you really do need your model to run faster, and that you've explored all other avenues to speed up your model before embarking on a parallelisation project. |

| − | + | Still keen? OK, let's get stuck in. | |

=A Necessary Aside= | =A Necessary Aside= | ||

| − | We'll look at some parallel code | + | We'll look at some parallel code in a moment--I promise--but before we do we must take a look at some simple serial code and ask ourselves whether we've been as smart as we can and made the code run as fast as it can. I know you're keen to crack on, but trust me, it's '''really''' important to make sure that your serial code '''optimised''' before converting it into parallel code. If you don't, any inefficiencies in your code are just going to get magnified as it is run on several processors at the same time, and that's not what you want. With that in mind, be sure to look at the course on [http://source.ggy.bris.ac.uk/wiki/Profiling performance analysis] before starting on any parallel programming endeavours. Indeed, if you are lucky, you may find that your newly optimised code has been sped-up sufficiently and you don't need to do anything else to it! |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

=OpenMP= | =OpenMP= | ||

| − | There are a number of different ways to create parallel programs, and we're going to start with one approach, called OpenMP. There a number of reasons for this: | + | There are a number of different ways to create parallel programs, and we're going to start with one approach, called '''OpenMP'''. There a number of reasons for this: |

| − | # It's | + | # It's widely available, |

| − | # It's good for the muli-core processors that we find in a lot of computers | + | # It's good for the muli-core processors that we find in a lot of computers these days, |

| − | # It's fairly easy to use | + | # It's fairly easy to use, |

| − | # and it's based upon the not-so-minde-bending concept of | + | # and it's based upon the not-so-minde-bending concept of '''threads''' |

[[Image:Threads.gif|center|A schematic showing a multi-threaded process]] | [[Image:Threads.gif|center|A schematic showing a multi-threaded process]] | ||

| Line 42: | Line 32: | ||

<pre> | <pre> | ||

| − | svn co | + | svn co https://svn.ggy.bris.ac.uk/subversion-open/openmp/trunk ./openmp |

</pre> | </pre> | ||

=Hello, world= | =Hello, world= | ||

| − | + | Once you have the examples, do the following: | |

<pre> | <pre> | ||

| + | cd openmp | ||

cd examples/example1 | cd examples/example1 | ||

| − | |||

| − | |||

</pre> | </pre> | ||

| − | '''Tada!''' Just like the old classic 'hello, world', but this time run in parallel on as many processors as you have available on your machine. Good eh? Now, how did that | + | You may have a number of compilers at your disposal. The makefile can handle GNU, PGI and INTEL. In order to compile using the Intel compiler, for example, type: |

| + | |||

| + | <pre> | ||

| + | make COMP=INTEL | ||

| + | </pre> | ||

| + | |||

| + | Once compiled, you can run the program: | ||

| + | |||

| + | <pre> | ||

| + | ./hello_f90.exe | ||

| + | </pre> | ||

| + | |||

| + | (Note that if you are using PGI, you will need to set an enviromnent variable from the outset: export OMP_NUM_THREADS=4. More of that later..) | ||

| + | |||

| + | '''Tada!''' Just like the old classic 'hello, world', but this time run in parallel on as many processors as you have available on your machine. Good eh? Now, how did we do that? | ||

Take a look inside the file '''omp_hello_f90.f90'''. First up we have '''used''' a Fortran90 module containing routines specific to OpenMP: | Take a look inside the file '''omp_hello_f90.f90'''. First up we have '''used''' a Fortran90 module containing routines specific to OpenMP: | ||

| Line 67: | Line 70: | ||

<pre> | <pre> | ||

omp_get_num_threads() | omp_get_num_threads() | ||

| + | </pre> | ||

| + | |||

| + | and | ||

| + | |||

| + | <pre> | ||

| + | omp_get_thread_num() | ||

</pre> | </pre> | ||

| Line 79: | Line 88: | ||

These lines specify that the '''master thread''' should fork a parallel region. From the code, you will see that all the threads on the team will get their '''thread ID'''--through calls to the OpenMP library--and will print it. The master thread will also ask how many threads have been spawned by the '''OpenMP runtime environment''', and will print the total. | These lines specify that the '''master thread''' should fork a parallel region. From the code, you will see that all the threads on the team will get their '''thread ID'''--through calls to the OpenMP library--and will print it. The master thread will also ask how many threads have been spawned by the '''OpenMP runtime environment''', and will print the total. | ||

| − | Notice that the variables '''nthreads''' and '''tid''' have been marked as '''private'''. This means that seperate copies of the these variables will be kept for each thread. This is essential, or else the print statement, 'Hello, world from thread = ' would get all mixed up, right? Try deliberatly mucking things up. Go on, see what happens if you delete '''tid''' from the private list. | + | Notice that the variables '''nthreads''' and '''tid''' have been marked as '''private'''. This means that seperate copies of the these variables will be kept for each thread. This is essential, or else the print statement, 'Hello, world from thread = ' would get all mixed up, right? Try deliberatly mucking things up. Go on, see what happens if you delete '''tid''' from the private list. You can always use, e.g.: |

| + | |||

| + | <pre> | ||

| + | svn revert omp_hello_f90.f90 | ||

| + | </pre> | ||

| + | |||

| + | to get the original version of the program back, if you get into a pickle. | ||

| + | |||

| + | The line: | ||

| + | |||

| + | <pre> | ||

| + | !$omp barrier | ||

| + | </pre> | ||

| + | |||

| + | Specifies that all threads must reach this point before going on. A kind of catch-up point. | ||

| + | |||

| + | Look inside the '''Makefile''' and notice that the use of OpenMP has been flagged to the compiler. '''-fopenmp''' in this case, as we are using the GNU compiler, '''gfortran'''. (It would be just '''-openmp''' if you were using '''ifort''', say.) If you were to try to compile the code without this flag, you'd get a compile-time error. | ||

| + | |||

| + | If you prefer C, there's a version of the program for you in '''omp_hello_c.c'''. All of the general comments above hold for this example too. You'll notice the use of: | ||

| + | |||

| + | <pre> | ||

| + | #include <omp.h> | ||

| + | </pre> | ||

| − | + | (instead of '''use omp_lib''') | |

| − | + | and statements of the form: | |

| + | |||

| + | <pre> | ||

| + | #pragma omp ... | ||

| + | </pre> | ||

| + | |||

| + | for the OpenMP instructions. | ||

=Work Sharing inside Loops= | =Work Sharing inside Loops= | ||

| − | + | Great. We can run some code in parallel and it wasn't too tricky, right? As fun as 'hello, world' always is, the real benefits appear when we can divide up a task between different processors and let them get on with some real work in parallel. That way the program will run faster overall. In OpenMP-speak, this is '''worksharing'''. Let's look at a straightforward example: | |

<pre> | <pre> | ||

| Line 93: | Line 130: | ||

</pre> | </pre> | ||

| − | Again we have C and Fortran90 versions of the same program. Take a look inside ''' | + | Again we have C and Fortran90 versions of the same program. Take a look inside '''simple_f90.f90'''. Here we see that we have a parallel section and each thread enquires of it's ID and the master enquires of the total number of threads. All as per example1. Notice this time, however, that some variables are marked as shared. In particular, the array '''a'''. Inside the parallel section, we have a do loop preceeded by the comment: |

| + | |||

| + | <pre> | ||

| + | !$omp do | ||

| + | </pre> | ||

| + | |||

| + | This statement is the key to the worksharing. It means that iterations from the '''do''' loop will be farmed out to different processors and performed in parallel. The easiest way to see what is going on is to run the program: | ||

| + | |||

| + | <pre> | ||

| + | make | ||

| + | ./simple_f90.f90 | ||

| + | </pre> | ||

| + | |||

| + | We can see that the iterations from the loop were '''chunked''' and that one processor placed values into a block of cells in the array (<tt>a</tt>) and that another processor was responsible for another block. | ||

| + | |||

| + | In addition to this default behaviour, OpenMP provides us with a number of keywords so that we may exert some finer grain control on the worksharing. To see an example of this take a look inside ''''schedule_f90.f90'''. Here we see: | ||

<pre> | <pre> | ||

| Line 99: | Line 151: | ||

</pre> | </pre> | ||

| − | + | and that we have previously set the value of '''chunk''' to 10. The '''static''' keyword indicates that each chunk of 10 contiguous iterations will be farmed out to each thread in turn--round-robin fashion. Since we are initialising the values in the array in the loop, all threads need access to the same array (<tt>a</tt>) and that's why it is marked as '''shared'''. | |

| − | + | There are a number of different scheduling keywords we can use. Try replacing '''static''' with '''dynamic'''. In this case chunks are handed out to processors as they become available. You may see a certain (quiet) processor get used several times and so all the processors may not be called upon in the workshare. Try changing the chunk size too. Go on, make a mess! It's the best way to learn:) | |

| − | =Reductions= | + | =Cache Coherency & Reductions= |

| − | A reduction combines elements from the different threads to form a single result. | + | A reduction combines elements from the different threads to form a single result. This is something that we might like to do quite often. For example, reductions can be summations or products. Let's look at the next example: |

<pre> | <pre> | ||

| Line 111: | Line 163: | ||

</pre> | </pre> | ||

| − | + | Here we'll look at an iterative algorithm to compute a value for '''pi''' and we'll see how we can speed this up by spreading the iteration over several processors. As normal, there are C and Fortran90 examples that we can look at. | |

| − | Note that in both the '''reduction_''' programs we explicitly control the number of threads that we want OpenMP to use (set to 4 initially) | + | Note that in both the '''reduction_''' programs we explicitly control the number of threads that we want OpenMP to use (set to 4 initially) with a call to: |

<pre> | <pre> | ||

| Line 127: | Line 179: | ||

</pre> | </pre> | ||

| − | We have a similar comment line in the Fortran code. | + | We have a similar comment line in the Fortran code. Note in this case that the '''parallel do''' statement has a corresponding '''end parallel do''': |

<pre> | <pre> | ||

| Line 134: | Line 186: | ||

!$omp end parallel do | !$omp end parallel do | ||

</pre> | </pre> | ||

| + | |||

| + | The C-code example has only the: | ||

| + | |||

| + | <pre> | ||

| + | #pragma omp parallel for ... | ||

| + | </pre> | ||

| + | |||

| + | since the '''for''' block is structured and hence the end of the block is given by the syntax. | ||

In both cases we see that '''sum''' is indicated as the '''reduction variable''', and that '''x''' is kept private to each thread. | In both cases we see that '''sum''' is indicated as the '''reduction variable''', and that '''x''' is kept private to each thread. | ||

| − | Now compile and run the programs, timing them as | + | Now compile and run the serial and parallel programs, timing them as they go: |

<pre> | <pre> | ||

| Line 145: | Line 205: | ||

</pre> | </pre> | ||

| − | Note how much quicker the parallel code runs. You could try changing the number of threads that we utilise (up to a maximum of 8 | + | Note how much quicker the parallel code runs. You could try changing the number of threads that we utilise (probably up to a maximum of 8, since the hardware most likely has a maximum of 8 processor-cores). Recompile using '''make''', and re-time the runs. |

| + | |||

| + | You can also time, e.g. '''parallel_pi_c.exe'''. Notice how much slower this is than the reduction version. The reason for this is that in the reduction case, each thread updates a private copy of '''sum''' and these private varaibles are combined (using the specified reduction operator) into a single value at the end of the parallel section. In the naive case, each thread is constantly updating a single shared value. A copy of this variable will be kept in cache for each thread and caches for threads running on different processors will need to be kept up-to-date. This is the cache coherency problem and it is a significant issue for shared memory parallelism. | ||

| + | |||

| + | ==Exercises== | ||

| + | |||

| + | * Write 2 programs using pthreads; one which employs a reduction and one which does not, and use them to highlight the cache coherency problem. | ||

| + | |||

| + | =Matrix Multiplication= | ||

| + | |||

| + | The previous examples have been useful in highlighting the key features that OpenMP offers, but have been very simple. Let's move onto a more realistic task. Matrix multiplication is a good candidate for parallelisation: | ||

| + | |||

| + | <pre> | ||

| + | cd ../example4 | ||

| + | </pre> | ||

| + | |||

| + | Again, let's compile the code and time the serial and parallel versions: | ||

| + | |||

| + | <pre> | ||

| + | make | ||

| + | time ./serial_mm_fort.exe | ||

| + | time ./omp_mm_fort.exe | ||

| + | </pre> | ||

| + | |||

| + | If you look inside '''serial_mm_fort.f90''' you will see that after the initialisations have been done, the work of the matrix multiplication is done by three nested loops: | ||

| + | |||

| + | <pre> | ||

| + | do ii=1, nra | ||

| + | do jj=1, ncb | ||

| + | do kk=1, nca | ||

| + | c(ii,jj) = c(ii,jj) + a(ii,kk) * b(kk,jj) | ||

| + | end do | ||

| + | end do | ||

| + | end do | ||

| + | </pre> | ||

| + | |||

| + | In the OpenMP version, this is modified to: | ||

| + | |||

| + | <pre> | ||

| + | !$omp do schedule(static, chunk) | ||

| + | do ii=1, nra | ||

| + | print *, 'thread', tid, 'did row', ii | ||

| + | do jj=1, ncb | ||

| + | do kk=1, nca | ||

| + | c(ii,jj) = c(ii,jj) + a(ii,kk) * b(kk,jj) | ||

| + | end do | ||

| + | end do | ||

| + | end do | ||

| + | </pre> | ||

| + | |||

| + | If you look carefully at the output, you will see that each processor does a chunk (set to 10) of the iterations of the outer loop (which has the index <tt>ii</tt>). The inner loops are done by the same processor. | ||

| + | |||

| + | '''Homework''': Given our knowledge of row-major order and column-major order storage of 2-dimensional arrays, form the first section above, is there a better way to arrange the loops, and hence worksharing, for the C and Fortran examples? | ||

| + | |||

| + | =Some More Features= | ||

| + | |||

| + | In this section, I'll list some more useful features offered by OpneMP, but without example code to go with them, since I reckon you're getting the hang of things now:) I'll use the Fortran syntax for my descriptions, but the corresponding C constructs are as you would expect them to be. | ||

| + | |||

| + | Only one thread in the team will execute the enclosed block of code: | ||

| + | |||

| + | <pre> | ||

| + | !$omp single | ||

| + | ... | ||

| + | !$omp end single | ||

| + | </pre> | ||

| + | |||

| + | Only the master thread will execute the enclosed code: | ||

| + | |||

| + | <pre> | ||

| + | !$omp master | ||

| + | ... | ||

| + | !$omp end master | ||

| + | </pre> | ||

| + | |||

| + | Only one thread at a time will execute the block (for 'non-thread-safe' code): | ||

| + | |||

| + | <pre> | ||

| + | !$omp critical | ||

| + | ... | ||

| + | !$omp end critical | ||

| + | </pre> | ||

| + | |||

| + | An interesting construct that will ensure that the loop iterations are done in the same order as would be done by the corresponding piece of serial code: | ||

| + | |||

| + | <pre> | ||

| + | !$omp do ordered | ||

| + | ...loop region... | ||

| + | !$omp ordered | ||

| + | ... | ||

| + | !$omp end ordered | ||

| + | ...end of loop region... | ||

| + | !$omp end do | ||

| + | </pre> | ||

| + | |||

| + | =A More Realistic Example= | ||

| + | |||

| + | <pre> | ||

| + | cd ../example5 | ||

| + | make | ||

| + | </pre> | ||

| + | |||

| + | In this example we will look at a simple model of a river and floodplain. The model is initialised with a grid of ground and water levels. Then, at each time step, the model calculates where the water should flow to. Let's run the model: | ||

| + | |||

| + | <pre> | ||

| + | ./lisflood | ||

| + | </pre> | ||

| + | |||

| + | The model will tell you little of what it's done, but will tell you how long it took to run, e.g.: | ||

| + | |||

| + | <pre> | ||

| + | Total computation time: 0.80 mins | ||

| + | </pre> | ||

| + | |||

| + | You can vary the number of processor-cores that OpenMP uses by setting the environment variable: | ||

| + | |||

| + | <pre> | ||

| + | export OMP_NUM_THREADS=1 | ||

| + | </pre> | ||

| + | |||

| + | This assumes the '''bash''' shell. For the csh (run '''echo $SHELL''' if you are unsure which shell you are using) you will need to type <tt>setenv OMP_NUM_THREADS 1</tt>. | ||

| + | |||

| + | Running the model on a quiet computer I got the following results: | ||

| + | |||

| + | [[Image:Lisflood-timings.JPG|600px|thumbnail|center|timings against number of cores used for processing]] | ||

| + | |||

| + | These points show that blindly adding more processors (beyond 4) can slow the overall program down. As ever in parallel programming, we have a trade-of between work-sharing and the overheads in marshalling your team of processors. (Note that on a busy machine, you may see a different profile, since the program may need to wait to access an already busy processor.) | ||

| + | |||

| + | You can experiment further with the notion of '''load-balancing''' by changing the initial conditions for the model: | ||

| + | |||

| + | <pre> | ||

| + | rm dem.asc | ||

| + | ln -s dem-dry.asc | ||

| + | </pre> | ||

| + | |||

| + | By typing the above, we have created a drier enviroment for the model. Some (actually many!) grid cells will now contain no water and so there is little work to be done for them. In this case, the overheads for more threads will not balance favourably with the work done by each thread. Try varying the number of processors used this time and see how your graph differs to the one created for the wetter environment. | ||

| + | |||

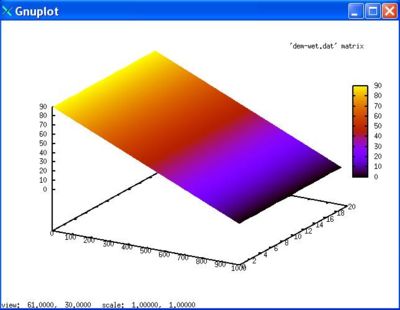

| + | If you are interested in visualising the data input and output by the model, you can use gnuplot after removing the first 6 lines of a file. For example, if you were to open <tt>dem-wet.asc</tt> and save it as <tt>dem-wet.dat</tt> after removing the header information, you can plot the data using: | ||

| + | |||

| + | <pre> | ||

| + | gnuplot | ||

| + | set pm3d | ||

| + | splot 'dem-wet.dat' matrix with pm3d | ||

| + | </pre> | ||

| + | |||

| + | and you will see a simple slope: | ||

| + | |||

| + | [[Image:Dem-wet.JPG|400px|thumbnail|center|domain of the model - water runs down the slope]] | ||

| + | |||

| + | =To Go Further= | ||

| − | + | A number of tutorials and example programs using OpenMP can be found by searching the Web. A few good ones that I've seen include: | |

| − | + | * [https://computing.llnl.gov/tutorials/openMP A comprehensive tutorial from Lawrence Livermore National Lab] | |

| + | * [http://www.cs.trinity.edu/~bmassing/CS3366/SamplePrograms/index.html Comparative serial and OpenMP programs progressing to, e.g. one to solve the heat diffusion equation] | ||

Latest revision as of 09:26, 5 September 2013

'OpenMP: Shared memory parallism'

Introduction

Computers. They're handy right? Email, buying stuff from Amazon.. You could even write a program. Create your own application. Indeed, for people of a certain mindset--people like you and me--we can do all sorts of interesting things like simulating the natural world, and in the process look at questions like, "will Greenland melt if we carry on polluting the atmosphere as we are?" and "what will be the consequences if it does melt?".

Sometimes it's handy to have more than one computer. Let's say that we have a new whizz-bang weather model that takes 26 hours to work out what the weather will do tomorrow. "Very nice. Superb accuracy," you say, "but, alas, about as much use as a chocolate teapot." In order for the model to be useful in the real world, we need it to run faster. We need to divide up the work it does and run it over two, or more computers. We need to enter the world of parallel programming.

"Hippee!" we cry, but a word of caution. Getting models to work reliably in parallel is a lot--I'll say it again--a lot harder than getting them to work on a single processor. Before setting out down the road, it is well worth checking that you really do need your model to run faster, and that you've explored all other avenues to speed up your model before embarking on a parallelisation project.

Still keen? OK, let's get stuck in.

A Necessary Aside

We'll look at some parallel code in a moment--I promise--but before we do we must take a look at some simple serial code and ask ourselves whether we've been as smart as we can and made the code run as fast as it can. I know you're keen to crack on, but trust me, it's really important to make sure that your serial code optimised before converting it into parallel code. If you don't, any inefficiencies in your code are just going to get magnified as it is run on several processors at the same time, and that's not what you want. With that in mind, be sure to look at the course on performance analysis before starting on any parallel programming endeavours. Indeed, if you are lucky, you may find that your newly optimised code has been sped-up sufficiently and you don't need to do anything else to it!

OpenMP

There are a number of different ways to create parallel programs, and we're going to start with one approach, called OpenMP. There a number of reasons for this:

- It's widely available,

- It's good for the muli-core processors that we find in a lot of computers these days,

- It's fairly easy to use,

- and it's based upon the not-so-minde-bending concept of threads

At this point, we could launch overselves into a long and detailed discussion of threads, the OpenMP runtime environment, pre-processor macro statements and the like. But we won't. Because it's less fun. Let's just try an example instead.

OK, to get the examples, login to a Linux box and cut & paste the below onto the command line:

svn co https://svn.ggy.bris.ac.uk/subversion-open/openmp/trunk ./openmp

Hello, world

Once you have the examples, do the following:

cd openmp cd examples/example1

You may have a number of compilers at your disposal. The makefile can handle GNU, PGI and INTEL. In order to compile using the Intel compiler, for example, type:

make COMP=INTEL

Once compiled, you can run the program:

./hello_f90.exe

(Note that if you are using PGI, you will need to set an enviromnent variable from the outset: export OMP_NUM_THREADS=4. More of that later..)

Tada! Just like the old classic 'hello, world', but this time run in parallel on as many processors as you have available on your machine. Good eh? Now, how did we do that?

Take a look inside the file omp_hello_f90.f90. First up we have used a Fortran90 module containing routines specific to OpenMP:

use omp_lib

This gives us access to routines like:

omp_get_num_threads()

and

omp_get_thread_num()

The rest of the program is straightforward Fortran code, except for some comment lines starting with !$omp, such as:

!$omp parallel private(nthreads, tid) ... !$omp end parallel

These lines specify that the master thread should fork a parallel region. From the code, you will see that all the threads on the team will get their thread ID--through calls to the OpenMP library--and will print it. The master thread will also ask how many threads have been spawned by the OpenMP runtime environment, and will print the total.

Notice that the variables nthreads and tid have been marked as private. This means that seperate copies of the these variables will be kept for each thread. This is essential, or else the print statement, 'Hello, world from thread = ' would get all mixed up, right? Try deliberatly mucking things up. Go on, see what happens if you delete tid from the private list. You can always use, e.g.:

svn revert omp_hello_f90.f90

to get the original version of the program back, if you get into a pickle.

The line:

!$omp barrier

Specifies that all threads must reach this point before going on. A kind of catch-up point.

Look inside the Makefile and notice that the use of OpenMP has been flagged to the compiler. -fopenmp in this case, as we are using the GNU compiler, gfortran. (It would be just -openmp if you were using ifort, say.) If you were to try to compile the code without this flag, you'd get a compile-time error.

If you prefer C, there's a version of the program for you in omp_hello_c.c. All of the general comments above hold for this example too. You'll notice the use of:

#include <omp.h>

(instead of use omp_lib)

and statements of the form:

#pragma omp ...

for the OpenMP instructions.

Work Sharing inside Loops

Great. We can run some code in parallel and it wasn't too tricky, right? As fun as 'hello, world' always is, the real benefits appear when we can divide up a task between different processors and let them get on with some real work in parallel. That way the program will run faster overall. In OpenMP-speak, this is worksharing. Let's look at a straightforward example:

cd ../example2

Again we have C and Fortran90 versions of the same program. Take a look inside simple_f90.f90. Here we see that we have a parallel section and each thread enquires of it's ID and the master enquires of the total number of threads. All as per example1. Notice this time, however, that some variables are marked as shared. In particular, the array a. Inside the parallel section, we have a do loop preceeded by the comment:

!$omp do

This statement is the key to the worksharing. It means that iterations from the do loop will be farmed out to different processors and performed in parallel. The easiest way to see what is going on is to run the program:

make ./simple_f90.f90

We can see that the iterations from the loop were chunked and that one processor placed values into a block of cells in the array (a) and that another processor was responsible for another block.

In addition to this default behaviour, OpenMP provides us with a number of keywords so that we may exert some finer grain control on the worksharing. To see an example of this take a look inside 'schedule_f90.f90. Here we see:

!$omp do schedule(static,chunk)

and that we have previously set the value of chunk to 10. The static keyword indicates that each chunk of 10 contiguous iterations will be farmed out to each thread in turn--round-robin fashion. Since we are initialising the values in the array in the loop, all threads need access to the same array (a) and that's why it is marked as shared.

There are a number of different scheduling keywords we can use. Try replacing static with dynamic. In this case chunks are handed out to processors as they become available. You may see a certain (quiet) processor get used several times and so all the processors may not be called upon in the workshare. Try changing the chunk size too. Go on, make a mess! It's the best way to learn:)

Cache Coherency & Reductions

A reduction combines elements from the different threads to form a single result. This is something that we might like to do quite often. For example, reductions can be summations or products. Let's look at the next example:

cd ../example3

Here we'll look at an iterative algorithm to compute a value for pi and we'll see how we can speed this up by spreading the iteration over several processors. As normal, there are C and Fortran90 examples that we can look at.

Note that in both the reduction_ programs we explicitly control the number of threads that we want OpenMP to use (set to 4 initially) with a call to:

omp_set_num_threads(NUM_THREADS)

(A function call in C and a subroutine call in Fortran.)

In the C code, we flag the parallel reduction using the pragma statement:

#pragma omp parallel for reduction(+:sum) private(x)

We have a similar comment line in the Fortran code. Note in this case that the parallel do statement has a corresponding end parallel do:

!$omp parallel do reduction(+:sum) private(x) ... !$omp end parallel do

The C-code example has only the:

#pragma omp parallel for ...

since the for block is structured and hence the end of the block is given by the syntax.

In both cases we see that sum is indicated as the reduction variable, and that x is kept private to each thread.

Now compile and run the serial and parallel programs, timing them as they go:

make time ./serial_pi_f.exe time ./reduction_pi_f.exe

Note how much quicker the parallel code runs. You could try changing the number of threads that we utilise (probably up to a maximum of 8, since the hardware most likely has a maximum of 8 processor-cores). Recompile using make, and re-time the runs.

You can also time, e.g. parallel_pi_c.exe. Notice how much slower this is than the reduction version. The reason for this is that in the reduction case, each thread updates a private copy of sum and these private varaibles are combined (using the specified reduction operator) into a single value at the end of the parallel section. In the naive case, each thread is constantly updating a single shared value. A copy of this variable will be kept in cache for each thread and caches for threads running on different processors will need to be kept up-to-date. This is the cache coherency problem and it is a significant issue for shared memory parallelism.

Exercises

- Write 2 programs using pthreads; one which employs a reduction and one which does not, and use them to highlight the cache coherency problem.

Matrix Multiplication

The previous examples have been useful in highlighting the key features that OpenMP offers, but have been very simple. Let's move onto a more realistic task. Matrix multiplication is a good candidate for parallelisation:

cd ../example4

Again, let's compile the code and time the serial and parallel versions:

make time ./serial_mm_fort.exe time ./omp_mm_fort.exe

If you look inside serial_mm_fort.f90 you will see that after the initialisations have been done, the work of the matrix multiplication is done by three nested loops:

do ii=1, nra

do jj=1, ncb

do kk=1, nca

c(ii,jj) = c(ii,jj) + a(ii,kk) * b(kk,jj)

end do

end do

end do

In the OpenMP version, this is modified to:

!$omp do schedule(static, chunk)

do ii=1, nra

print *, 'thread', tid, 'did row', ii

do jj=1, ncb

do kk=1, nca

c(ii,jj) = c(ii,jj) + a(ii,kk) * b(kk,jj)

end do

end do

end do

If you look carefully at the output, you will see that each processor does a chunk (set to 10) of the iterations of the outer loop (which has the index ii). The inner loops are done by the same processor.

Homework: Given our knowledge of row-major order and column-major order storage of 2-dimensional arrays, form the first section above, is there a better way to arrange the loops, and hence worksharing, for the C and Fortran examples?

Some More Features

In this section, I'll list some more useful features offered by OpneMP, but without example code to go with them, since I reckon you're getting the hang of things now:) I'll use the Fortran syntax for my descriptions, but the corresponding C constructs are as you would expect them to be.

Only one thread in the team will execute the enclosed block of code:

!$omp single ... !$omp end single

Only the master thread will execute the enclosed code:

!$omp master ... !$omp end master

Only one thread at a time will execute the block (for 'non-thread-safe' code):

!$omp critical ... !$omp end critical

An interesting construct that will ensure that the loop iterations are done in the same order as would be done by the corresponding piece of serial code:

!$omp do ordered ...loop region... !$omp ordered ... !$omp end ordered ...end of loop region... !$omp end do

A More Realistic Example

cd ../example5 make

In this example we will look at a simple model of a river and floodplain. The model is initialised with a grid of ground and water levels. Then, at each time step, the model calculates where the water should flow to. Let's run the model:

./lisflood

The model will tell you little of what it's done, but will tell you how long it took to run, e.g.:

Total computation time: 0.80 mins

You can vary the number of processor-cores that OpenMP uses by setting the environment variable:

export OMP_NUM_THREADS=1

This assumes the bash shell. For the csh (run echo $SHELL if you are unsure which shell you are using) you will need to type setenv OMP_NUM_THREADS 1.

Running the model on a quiet computer I got the following results:

These points show that blindly adding more processors (beyond 4) can slow the overall program down. As ever in parallel programming, we have a trade-of between work-sharing and the overheads in marshalling your team of processors. (Note that on a busy machine, you may see a different profile, since the program may need to wait to access an already busy processor.)

You can experiment further with the notion of load-balancing by changing the initial conditions for the model:

rm dem.asc ln -s dem-dry.asc

By typing the above, we have created a drier enviroment for the model. Some (actually many!) grid cells will now contain no water and so there is little work to be done for them. In this case, the overheads for more threads will not balance favourably with the work done by each thread. Try varying the number of processors used this time and see how your graph differs to the one created for the wetter environment.

If you are interested in visualising the data input and output by the model, you can use gnuplot after removing the first 6 lines of a file. For example, if you were to open dem-wet.asc and save it as dem-wet.dat after removing the header information, you can plot the data using:

gnuplot set pm3d splot 'dem-wet.dat' matrix with pm3d

and you will see a simple slope:

To Go Further

A number of tutorials and example programs using OpenMP can be found by searching the Web. A few good ones that I've seen include: